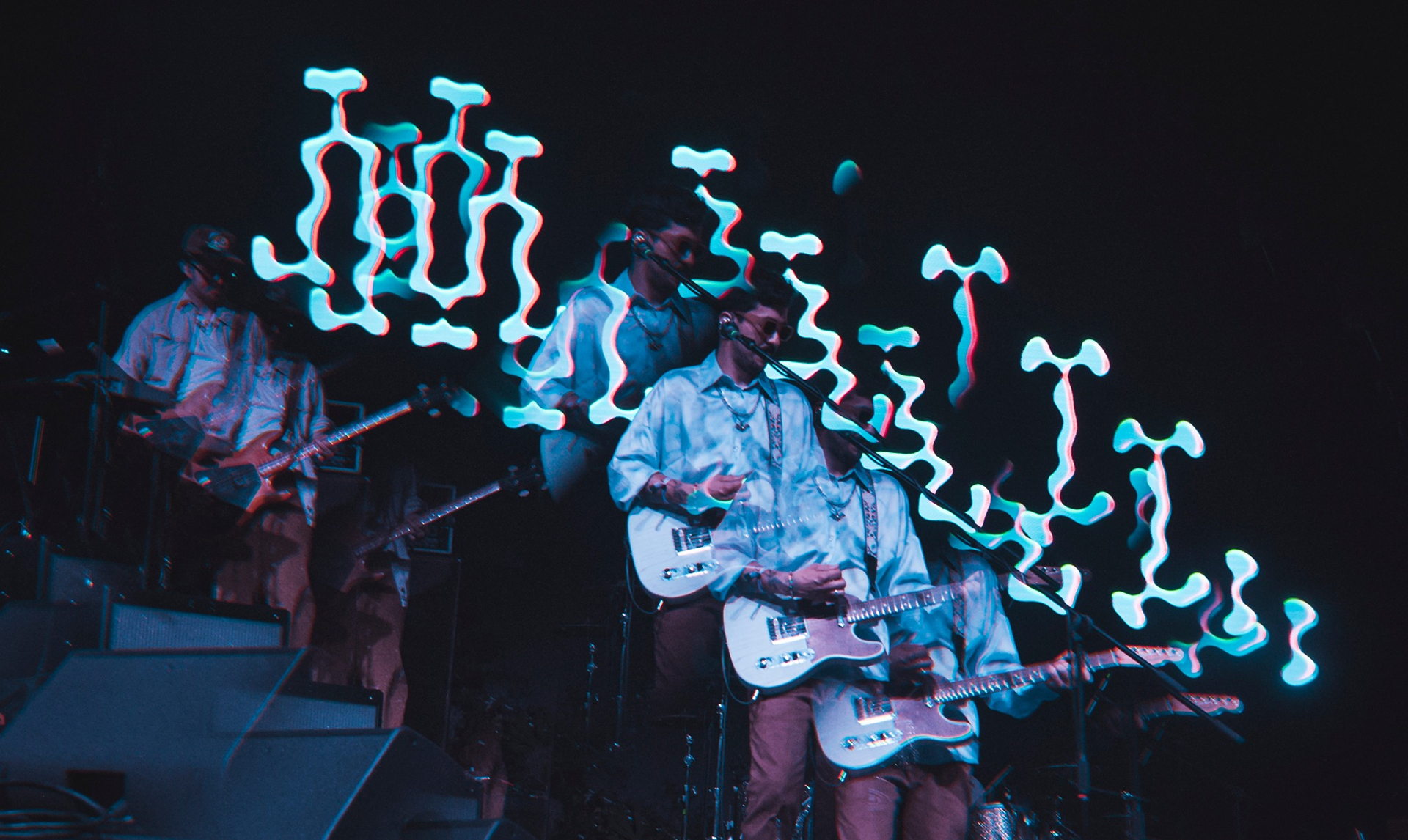

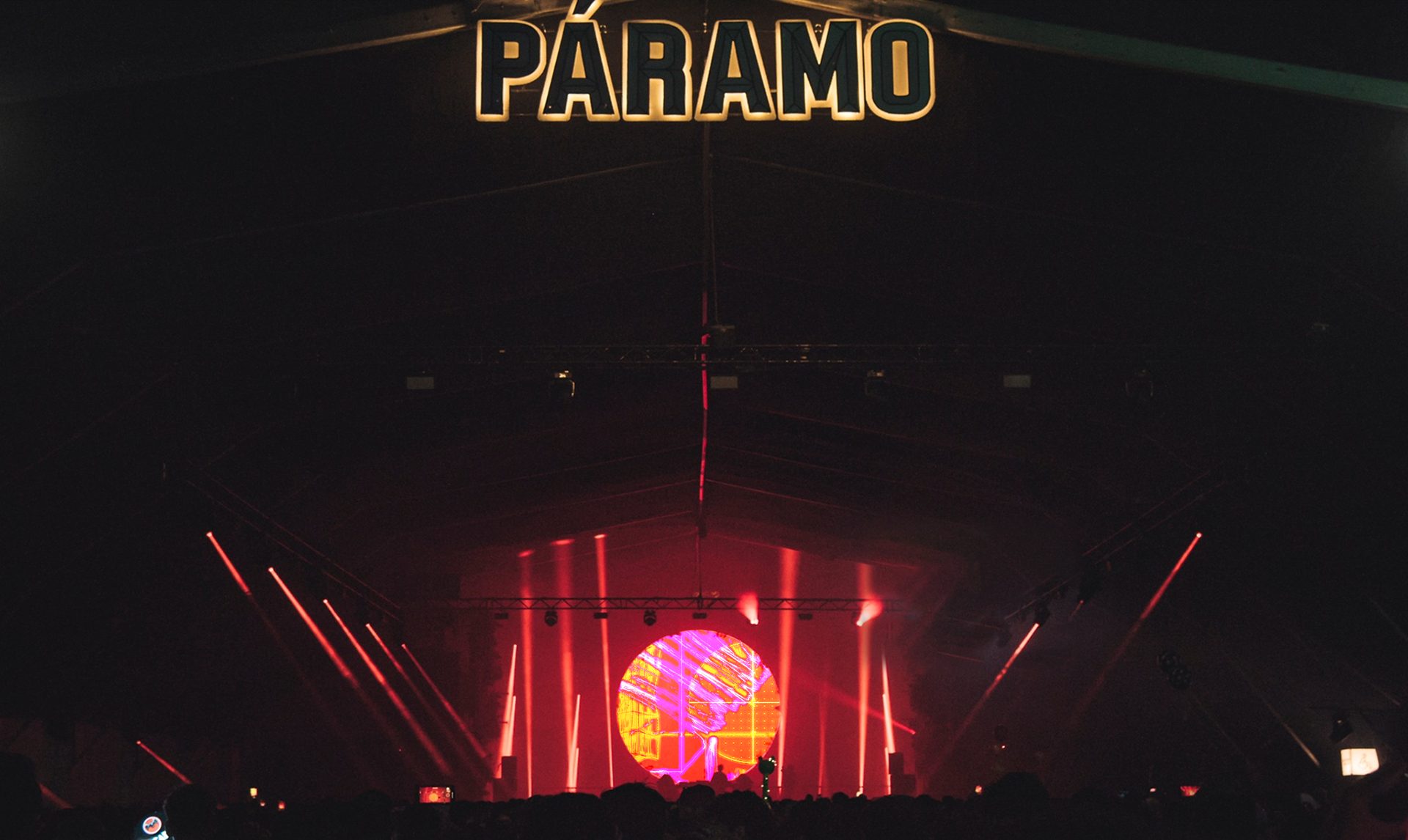

Show Visual Design – Sensor-Based Visuals in Real Time

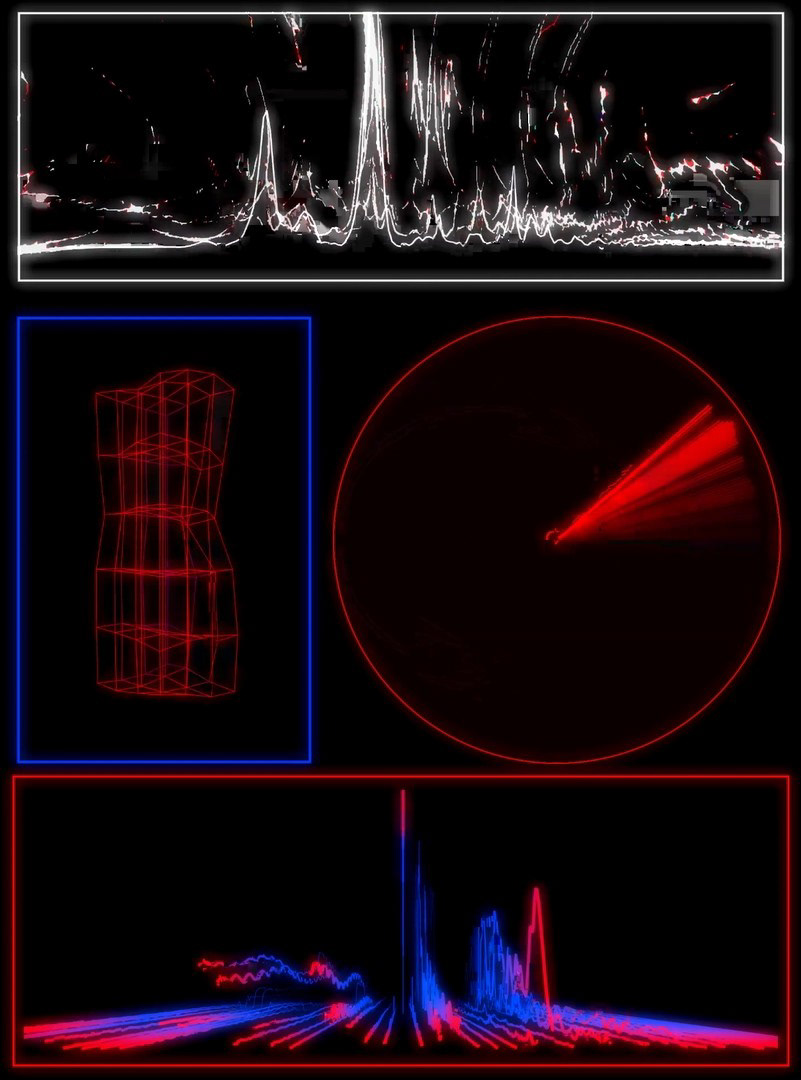

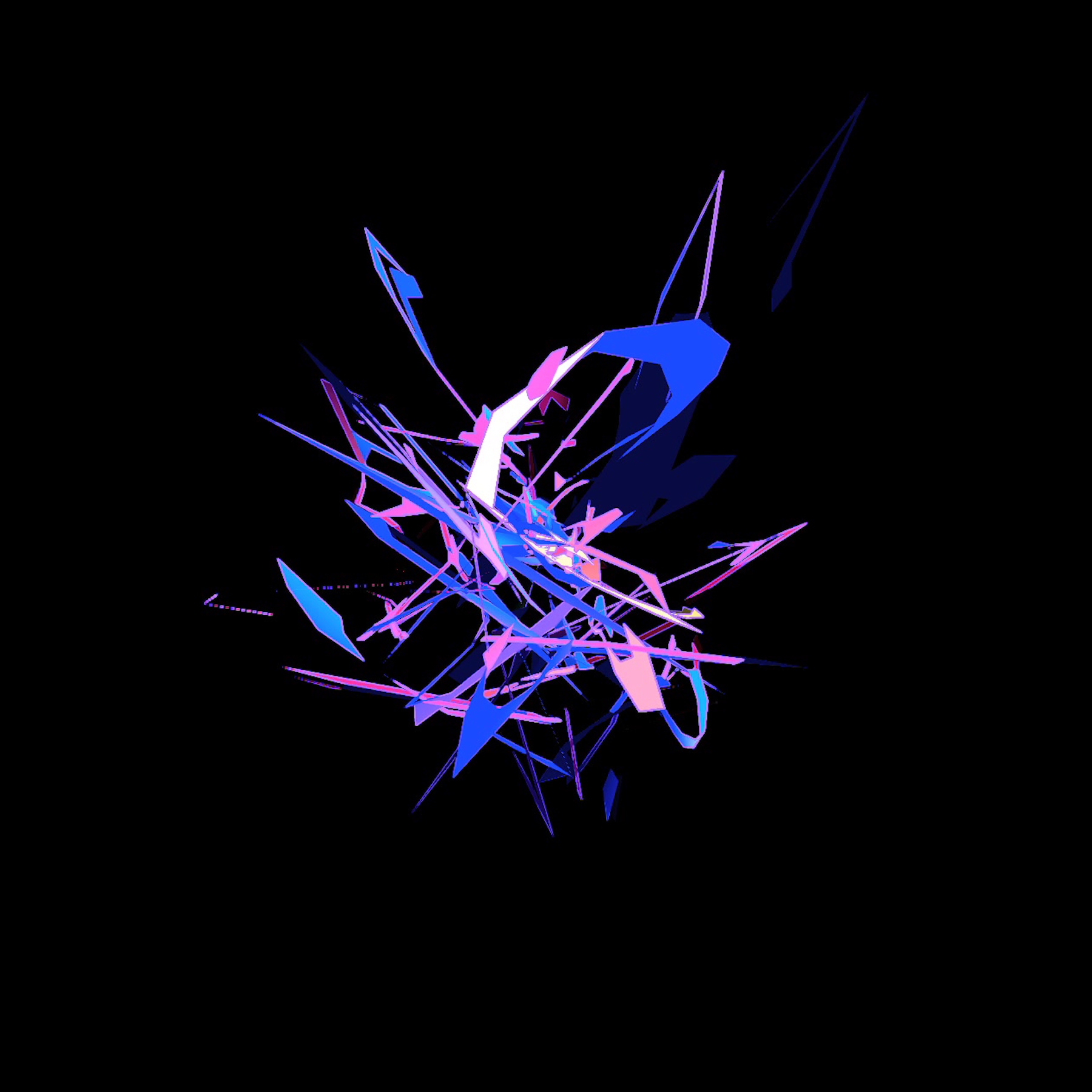

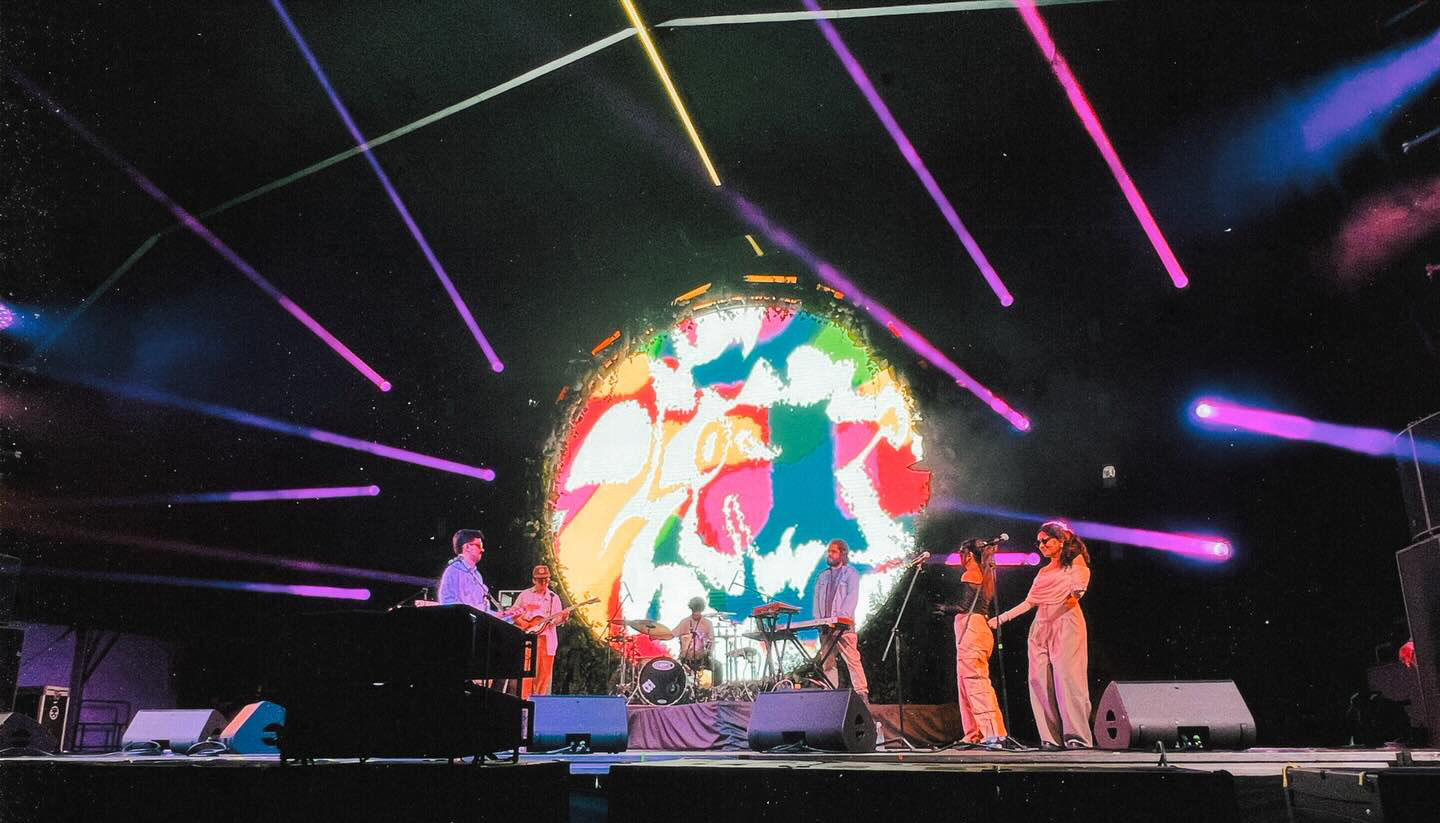

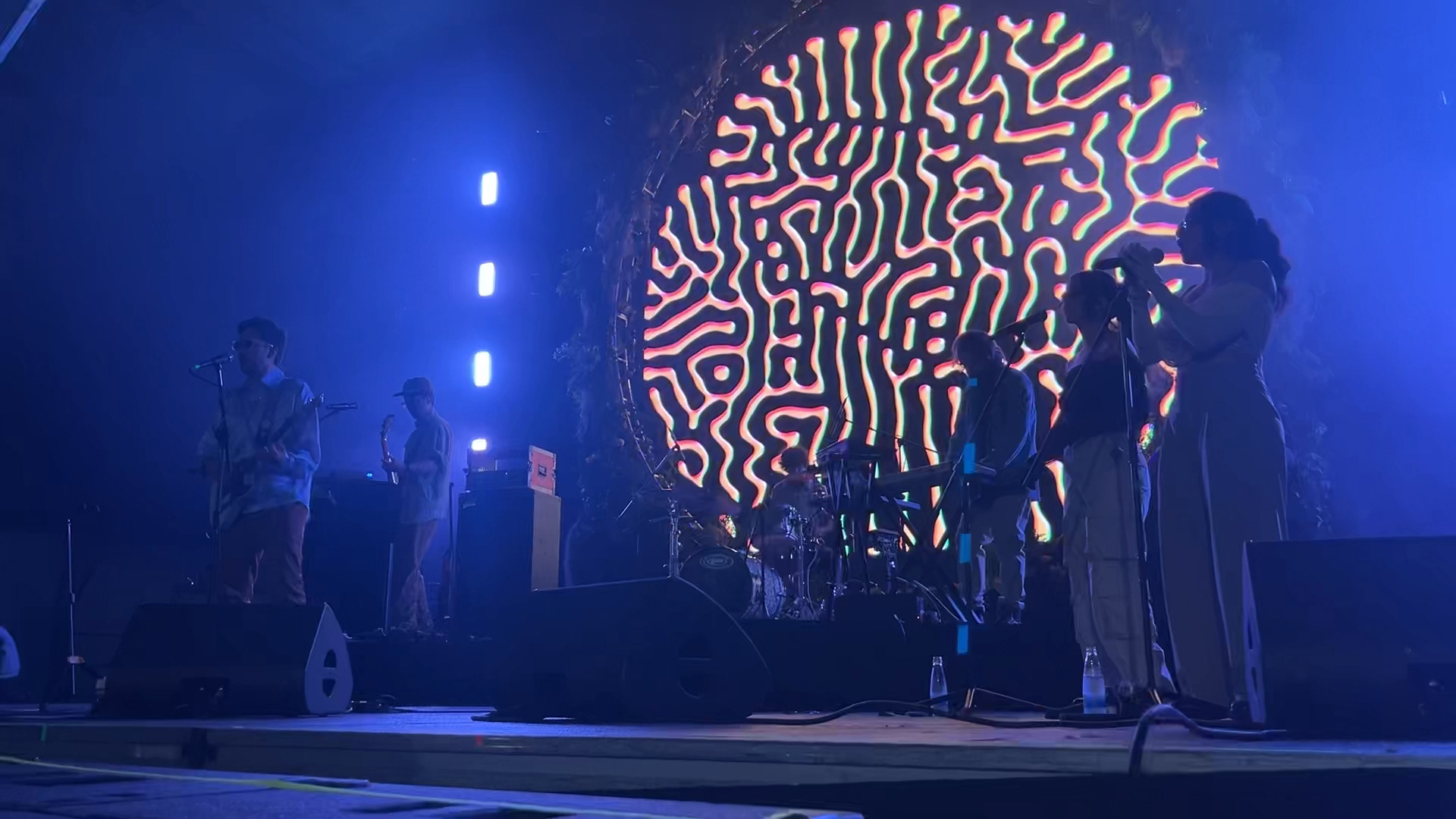

For this concert, I developed a custom visual system in TouchDesigner that combines motion-capture recordings with real-time visual mixing. I recorded the artist’s gestures with Kinect and Leap Motion sensors, and those captured movements became the foundation for a series of generative visual clips.

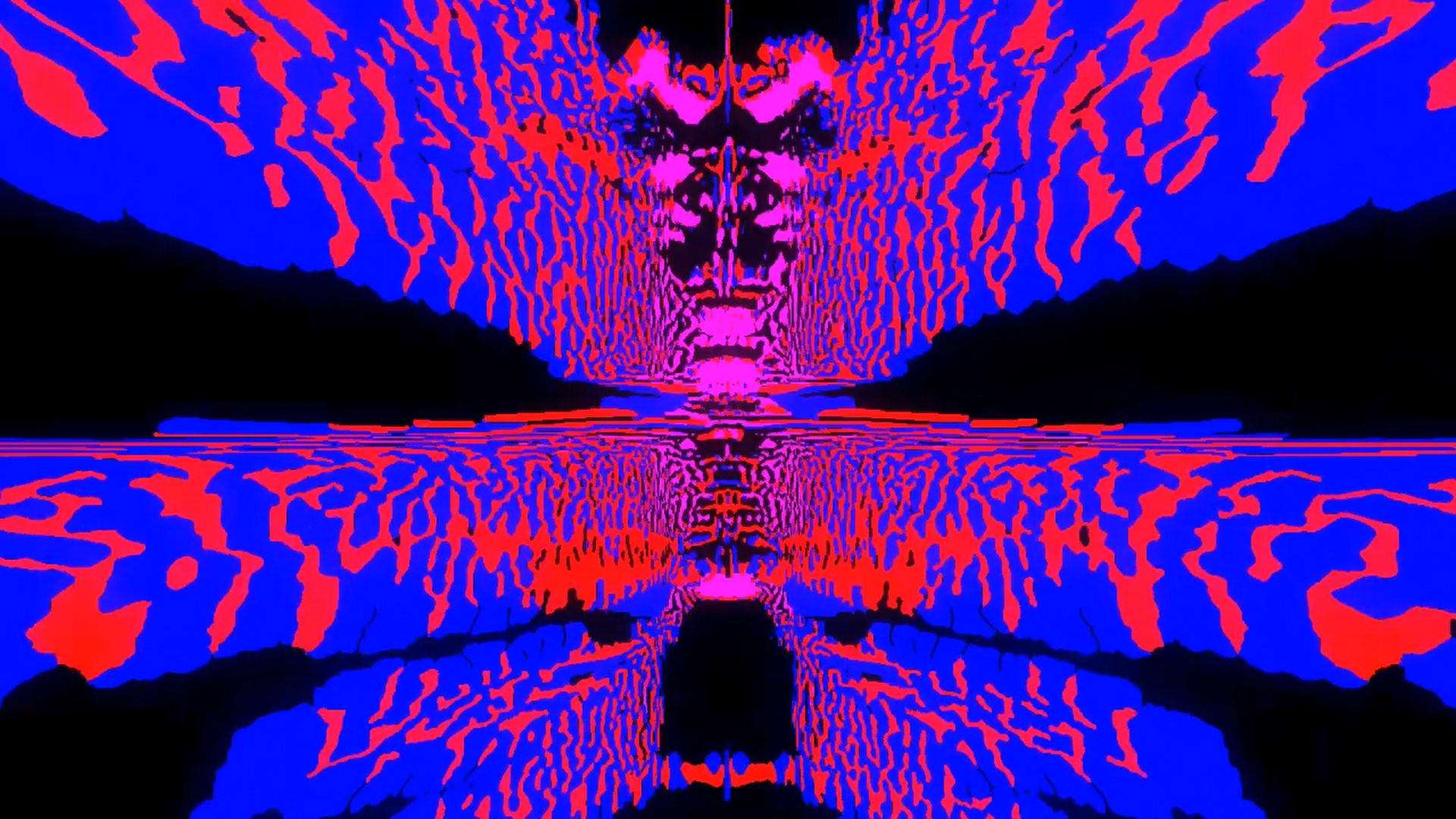

During the live show, I performed a real-time mix of these visuals, using custom-built parameters coded in TouchDesigner to manipulate timing, color, rhythm, and structure.
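As a rough illustration of what such live-mix parameters can look like, here is a minimal sketch in plain Python (the language TouchDesigner uses for scripting). It is not the network used in the show: the `MixParams` fields, the function names, and the cosine beat pulse are all assumptions made for the example. It shows one control each for timing (playback speed), color (hue shift), rhythm (a tempo-synced brightness pulse), and structure (a crossfade between two clips).

```python
import colorsys
import math
from dataclasses import dataclass


@dataclass
class MixParams:
    """Hypothetical live-mix controls; names are illustrative only."""
    crossfade: float = 0.0  # structure: 0.0 = clip A only, 1.0 = clip B only
    hue_shift: float = 0.0  # color: fraction of a full turn around the color wheel
    bpm: float = 120.0      # rhythm: tempo that drives the brightness pulse
    speed: float = 1.0      # timing: playback-rate multiplier for the clips


def clamp01(x):
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def clip_time(t, speed, duration):
    """Map show time t (seconds) to a looping position inside a clip."""
    return (t * speed) % duration


def beat_pulse(t, bpm):
    """Return a pulse in [0, 1] that peaks on every beat."""
    phase = (t * bpm / 60.0) % 1.0
    return 0.5 * (1.0 + math.cos(2.0 * math.pi * phase))


def shift_hue(rgb, amount):
    """Rotate the hue of an RGB color (channels in [0, 1])."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb((h + amount) % 1.0, s, v)


def mix_pixel(a, b, params, t):
    """Blend one pixel from clip A and clip B under the current controls."""
    fade = clamp01(params.crossfade)
    blended = tuple((1.0 - fade) * ca + fade * cb for ca, cb in zip(a, b))
    shifted = shift_hue(blended, params.hue_shift)
    # Brightness swings between 50% and 100%, in time with the beat.
    gain = 0.5 + 0.5 * beat_pulse(t, params.bpm)
    return tuple(clamp01(c * gain) for c in shifted)


if __name__ == "__main__":
    params = MixParams(crossfade=0.5, hue_shift=0.1, bpm=100.0)
    print(mix_pixel((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), params, t=0.3))
```

In a real TouchDesigner project, controls like these would more likely be custom parameters on a component, driving operators on the GPU rather than per-pixel Python. The sketch only shows the mapping from a handful of performer-facing controls to the resulting image.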

This project explores the expressive potential of motion capture and creative coding in live performance, bringing together movement, music, and generative design in a cohesive, immersive atmosphere.